Few-shot prompting is a technique that involves providing a few examples of the desired output format or task in the prompt itself. This technique is particularly useful when the user has a very specific or repetitive task.

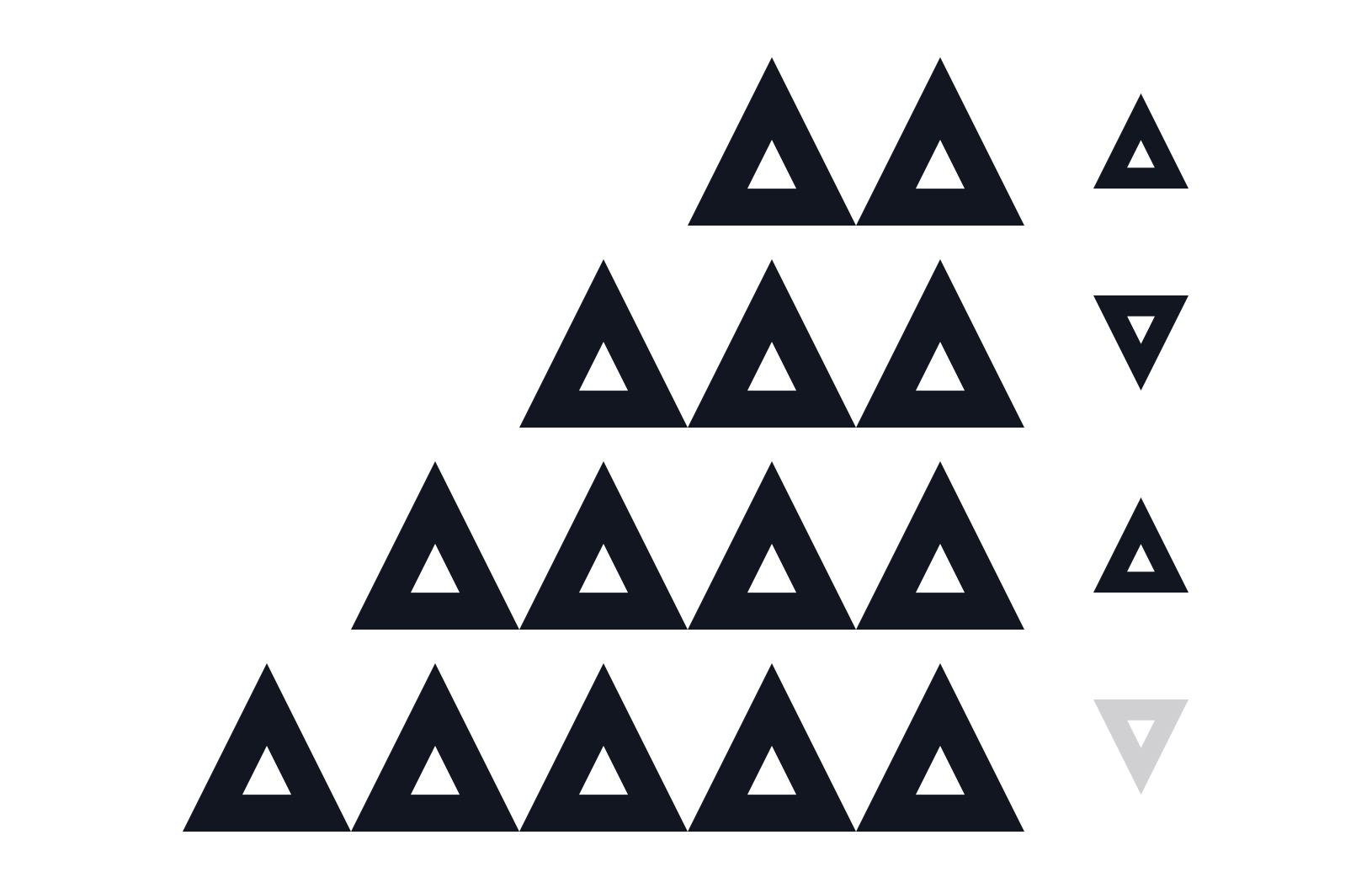

Prompting a model with no examples is called "zero-shot" prompting, while prompting with a small number of examples is called "few-shot" prompting. The name can also state the exact count: "one-shot" prompting for one example, "two-shot" prompting for two examples, and so on.

For example, instead of prompting the model (zero-shot) with a question like:

You could provide a few examples of similar questions (few-shot/three-shot) like:

This helps the model infer the pattern for that specific task and apply it to the new input, improving the relevance and quality of its outputs.
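To make the zero-shot versus few-shot distinction concrete, here is a minimal Python sketch that assembles both kinds of prompt as plain strings. The helper `build_prompt`, the `Q:`/`A:` layout, and the capital-city questions are all hypothetical choices made for illustration; they are not taken from the original examples, and any consistent format would serve the same purpose.

```python
def build_prompt(question, examples=()):
    """Return a prompt string.

    `examples` is a sequence of (question, answer) pairs; an empty
    sequence yields a zero-shot prompt. (Hypothetical helper.)
    """
    lines = []
    for example_question, example_answer in examples:
        lines.append(f"Q: {example_question}")
        lines.append(f"A: {example_answer}")
        lines.append("")  # blank line between examples
    # The real question goes last, with the answer left open for the model.
    lines.append(f"Q: {question}")
    lines.append("A:")
    return "\n".join(lines)


# Illustrative (made-up) examples that demonstrate the expected answer style.
examples = [
    ("What is the capital of France?", "Paris"),
    ("What is the capital of Japan?", "Tokyo"),
    ("What is the capital of Kenya?", "Nairobi"),
]

zero_shot = build_prompt("What is the capital of Canada?")
three_shot = build_prompt("What is the capital of Canada?", examples)

print(zero_shot)
print("---")
print(three_shot)
```

Passing a longer or shorter `examples` list changes the "shot" count without touching the rest of the prompt, which makes it easy to experiment with how many examples a given task needs.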

The number of examples can also vary depending on the complexity of the task and the model's capabilities.

Few-shot prompting can also be used for classification tasks, where the prompt includes labeled examples of each class or category to help the model understand the task.

For example, to classify a text as a positive or negative statement, you could provide a few examples of positive and negative sentences (few-shot/four-shot) in the prompt, such as:
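A four-shot sentiment prompt of this kind could be sketched in Python as follows. The example sentences, the `Text:`/`Sentiment:` layout, and the helper `build_classification_prompt` are assumptions made for illustration rather than a prescribed format.

```python
# Hypothetical labeled examples: two positive and two negative sentences.
LABELED_EXAMPLES = [
    ("I loved every minute of the film.", "positive"),
    ("The service was slow and rude.", "negative"),
    ("This is the best purchase I have made all year.", "positive"),
    ("The battery died after two days.", "negative"),
]


def build_classification_prompt(text, examples=LABELED_EXAMPLES):
    """Build a few-shot sentiment prompt ending with an unlabeled text."""
    header = "Classify each text as positive or negative."
    shots = [f"Text: {t}\nSentiment: {label}" for t, label in examples]
    # The final entry leaves the label blank for the model to fill in.
    query = f"Text: {text}\nSentiment:"
    return "\n\n".join([header, *shots, query])


prompt = build_classification_prompt("The food was cold when it arrived.")
print(prompt)
```

Including examples of both classes shows the model the full set of allowed labels, and ending the prompt at `Sentiment:` nudges it to reply with just one of them.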

Few-shot prompting works best for well-defined tasks with clear patterns or structures that can be picked up from a few examples, such as basic question answering, text classification, and similar tasks.